Thursday 5 February 2015

Q: How do I select the right number of instances for my cluster?

The number of instances to use in your cluster is application-dependent and should be based on both the amount of resources required to store and process your data and the acceptable amount of time for your job to complete. As a general guideline, we recommend that you use no more than 60% of your disk space for the data you will be processing, leaving the rest for intermediate output. Hence, given 3x replication on HDFS, if you want to process 5 TB on m1.xlarge instances, which have 1,690 GB of disk space each, we recommend that your cluster contain at least (5 TB * 3) / (1,690 GB * 0.6) ≈ 14.8, rounded up to 15 m1.xlarge core nodes. You may want to increase this number if your job generates a large amount of intermediate data or has significant I/O requirements. You may also want to include additional task nodes to improve processing performance. See Amazon EC2 Instance Types for details on local instance storage for each instance type configuration.
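The guideline above can be sketched as a small calculation. This is only an illustration of the arithmetic: the function name and its parameters are made up for this example, and 5 TB is taken as 5,000 GB.

```python
import math


def core_nodes_needed(data_gb, disk_per_node_gb, replication=3, usable_fraction=0.6):
    """Minimum number of core nodes under the 60% disk-usage guideline."""
    # Every block is stored `replication` times on HDFS.
    raw_storage_gb = data_gb * replication
    # Only part of each node's disk is reserved for the input data;
    # the remainder is left for intermediate output.
    usable_per_node_gb = disk_per_node_gb * usable_fraction
    # A fraction of a node is not possible, so round up.
    return math.ceil(raw_storage_gb / usable_per_node_gb)


# 5 TB (taken as 5,000 GB) on m1.xlarge nodes with 1,690 GB of disk each
print(core_nodes_needed(5000, 1690))  # 15
```

Treating 5 TB as 5,120 GB instead pushes the result to 16, so it is worth being explicit about units when sizing close to a boundary.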

Wednesday 4 February 2015

Amazon S3 Distcp configuration

Amazon S3 (Simple Storage Service) is a data storage service. You are billed monthly for storage and data transfer. Transfer between S3 and Amazon EC2 is free. This makes S3 attractive for Hadoop users who run clusters on EC2.

Hadoop provides two filesystems that use S3.

- S3 Native FileSystem (URI scheme: s3n): A native filesystem for reading and writing regular files on S3. The advantage of this filesystem is that you can access files on S3 that were written with other tools. Conversely, other tools can access files written using Hadoop. The disadvantage is the 5GB limit on file size imposed by S3.

- S3 Block FileSystem (URI scheme: s3): A block-based filesystem backed by S3. Files are stored as blocks, just like they are in HDFS. This permits efficient implementation of renames. This filesystem requires you to dedicate a bucket to the filesystem: you should not use an existing bucket containing files, or write other files to the same bucket. The files stored by this filesystem can be larger than 5GB, but they are not interoperable with other S3 tools.

S3 can be used as a convenient repository for the input and output data of analytics applications, using either S3 filesystem. Data in S3 outlasts Hadoop clusters on EC2, so S3 is where persistent data should be kept.

Note that by using S3 as an input you lose the data locality optimization, which may be significant. The general best practice is to copy in data using distcp at the start of a workflow, then copy it out at the end, using the transient HDFS in between.

History

- The S3 block filesystem was introduced in Hadoop 0.10.0 (HADOOP-574), but this had a few bugs so you should use Hadoop 0.10.1 or later.

- The S3 native filesystem was introduced in Hadoop 0.18.0 (HADOOP-930) and rename support was added in Hadoop 0.19.0 (HADOOP-3361).

Why you cannot use S3 as a replacement for HDFS

You cannot use either of the S3 filesystems as a drop-in replacement for HDFS. Amazon S3 is an "object store" with

- eventual consistency: changes made by one application (creations, updates and deletions) may not be visible to others until some undefined time later.

- s3n: non-atomic rename and delete operations. Renaming or deleting a large directory takes time proportional to the number of entries, and the partially completed operation is visible to other processes during this time and, indeed, until the eventual consistency has been resolved.

S3 is not a filesystem. The Hadoop S3 filesystem bindings make it pretend to be a filesystem, but it is not. It can act as a source of data, and as a destination, though in the latter case you must remember that the output may not be immediately visible.
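One way to cope with output that is not immediately visible is to poll for it with a growing delay before starting a dependent step. The sketch below is an assumption-laden illustration, not part of Hadoop: wait_until_visible and its is_visible callback are hypothetical names, and the callback stands in for whatever existence check your tooling provides.

```python
import time


def wait_until_visible(is_visible, attempts=5, initial_delay=1.0, sleep=time.sleep):
    """Poll is_visible() up to `attempts` times, doubling the delay each time.

    Returns True as soon as the check succeeds, False if it never does.
    """
    delay = initial_delay
    for attempt in range(attempts):
        if is_visible():
            return True
        if attempt < attempts - 1:
            sleep(delay)   # back off before the next check
            delay *= 2
    return False


# Demonstration with a fake check that succeeds on the third call;
# the no-op sleep keeps the example instantaneous.
answers = iter([False, False, True])
print(wait_until_visible(lambda: next(answers), sleep=lambda seconds: None))  # True
```

A bounded number of attempts matters here: eventual consistency gives no upper limit on the delay, so the caller has to decide when to give up.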

Configuring to use s3/ s3n filesystems

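Edit your core-site.xml file to include your S3 keys. The properties below apply to the s3 scheme; for s3n, the corresponding properties are fs.s3n.awsAccessKeyId and fs.s3n.awsSecretAccessKey.

<property>
  <name>fs.s3.awsAccessKeyId</name>
  <value>ID</value>
</property>
<property>
  <name>fs.s3.awsSecretAccessKey</name>
  <value>SECRET</value>
</property>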

You can then use URLs to your bucket, s3n://MYBUCKET/, or to directories and files inside it:

s3n://BUCKET/

s3n://BUCKET/dir

s3n://BUCKET/dir/files.csv.tar.gz

s3n://BUCKET/dir/*.gz

Alternatively, you can put the access key ID and the secret access key into an s3n (or s3) URI as the user info:

s3n://ID:SECRET@BUCKET

Note that since the secret access key can contain slashes, you must remember to escape them by replacing each slash / with the string %2F. Keys specified in the URI take precedence over any specified using the properties fs.s3.awsAccessKeyId and fs.s3.awsSecretAccessKey.

This option is less secure, as the URLs are likely to appear in output logs and error messages, where they are exposed to remote users.
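The slash escaping described above is easy to get wrong by hand, so here is a minimal sketch of building such a URI. The helper name s3n_uri and the sample key are invented for illustration; only the rule of replacing each / with %2F comes from the text.

```python
def s3n_uri(access_key_id, secret_access_key, bucket, path=""):
    """Build an s3n URI that carries the credentials as user info."""
    # The secret key may contain slashes, which would otherwise be read
    # as path separators, so each "/" becomes "%2F".
    escaped_secret = secret_access_key.replace("/", "%2F")
    return f"s3n://{access_key_id}:{escaped_secret}@{bucket}/{path.lstrip('/')}"


# "abc/def/ghi" is a made-up secret, not a real key format.
print(s3n_uri("ID", "abc/def/ghi", "BUCKET", "dir/files.csv.tar.gz"))
# s3n://ID:abc%2Fdef%2Fghi@BUCKET/dir/files.csv.tar.gz
```

Because the result embeds the secret, treat the returned string as carefully as the key itself and keep it out of logs.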

Security

Your Amazon Secret Access Key is just that: secret. If it becomes known, you have to go to the Security Credentials page and revoke it. Avoid printing it in logs or checking the XML configuration files into revision control.

Running bulk copies in and out of S3

Support for the S3 block filesystem was added to the ${HADOOP_HOME}/bin/hadoop distcp tool in Hadoop 0.11.0 (see HADOOP-862). The distcp tool sets up a MapReduce job to run the copy. Using distcp, a cluster of many members can copy lots of data quickly. The number of map tasks is calculated by counting the number of files in the source, i.e. each map task is responsible for copying one file. Source and target may refer to disparate filesystem types. For example, the source might refer to the local filesystem or HDFS, with S3 as the target.

The distcp tool is useful for quickly prepping S3 for MapReduce jobs that use S3 for input or for backing up the content of hdfs.

Here is an example copying a nutch segment named 0070206153839-1998 at /user/nutch in hdfs to an S3 bucket named 'nutch' (Let the S3 AWS_ACCESS_KEY_ID be 123 and the S3 AWS_ACCESS_KEY_SECRET be 456):

% ${HADOOP_HOME}/bin/hadoop distcp hdfs://domU-12-31-33-00-02-DF:9001/user/nutch/0070206153839-1998 s3://123:456@nutch/

Flip the arguments if you want to run the copy in the opposite direction.

Other schemes supported by distcp are file (for local) and http.

Monday 26 January 2015

An Introduction to the AWS Command Line Tool

Amazon Web Services has an extremely functional and easy to use web console called the AWS Management Console. It’s brilliant for performing complex tasks on your AWS infrastructure, although as a Linux sysadmin, you may want something more "console" friendly.

In early September 2013, Amazon released version 1.0 of awscli, a powerful command line interface which can be used to manage AWS services.

In this two-part series, I’ll provide some working examples of how to use awscli to provision a few AWS services. (See An Introduction to the AWS Command Line Tool Part 2.)

We’ll be working with services that fall under the AWS Free Usage Tier. Please ensure you understand AWS pricing before proceeding.

For those unfamiliar with AWS and wanting to know a bit more, Amazon has excellent documentation on introductory topics.

Ensure you have a relatively current version of Python and an AWS account to be able to use awscli.

Installation & Configuration

Install awscli using pip. If you’d like to have awscli installed in an isolated Python environment, first check out virtualenv.

$ pip install awscli

Next, configure awscli to create the required ~/.aws/config file.

$ aws configure

It’s up to you which region you’d like to use, although keep in mind that generally the closer the region is to your internet connection, the less latency you will experience.

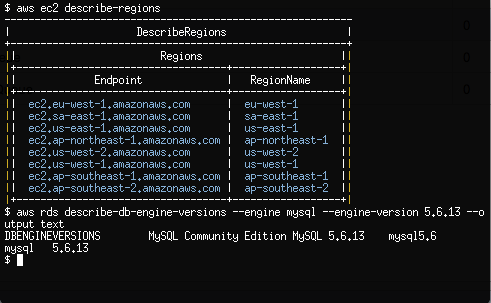

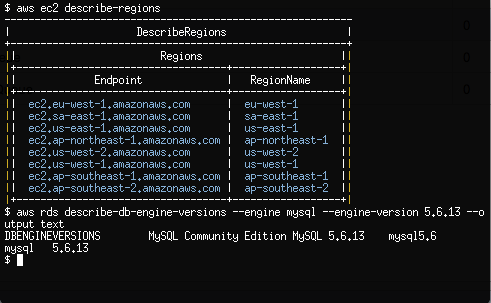

The regions are:

- ap-northeast-1

- ap-southeast-1

- ap-southeast-2

- eu-west-1

- sa-east-1

- us-east-1

- us-west-1

- us-west-2

For now, choose table as the Default output format. table provides pretty output which is very easy to read and understand, especially if you’re just getting started with AWS.

The json format is best suited to handling the output of awscli programmatically with tools like jq. The text format works well with traditional Unix tools such as grep, sed and awk.

If you’re behind a proxy, awscli understands the HTTP_PROXY and HTTPS_PROXY environment variables.

First Steps

So moving on, let’s perform our first connection to AWS.

$ aws ec2 describe-regions

A table should be produced showing the Endpoint and RegionName fields of the AWS regions that support EC2.

$ aws ec2 describe-availability-zones

The output from describe-availability-zones should list the AWS Availability Zones for our configured region.

awscli understands that we may not just want to stick to a single region.

$ aws ec2 describe-availability-zones --region us-west-2

By passing the --region argument, we change the region that awscli queries from the default we have configured with the aws configure command.
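If you switch the output format to json (for example with the --output json option), the result of describe-regions can be handled programmatically. The sketch below parses a trimmed, hand-written sample of that output rather than calling AWS; the helper name region_names is invented for this example, and the sample shows only the Endpoint and RegionName fields mentioned above.

```python
import json

# Hand-written sample in the shape of `aws ec2 describe-regions --output json`,
# shortened to two regions for illustration.
sample_output = """
{
    "Regions": [
        {"Endpoint": "ec2.us-east-1.amazonaws.com", "RegionName": "us-east-1"},
        {"Endpoint": "ec2.ap-southeast-2.amazonaws.com", "RegionName": "ap-southeast-2"}
    ]
}
"""


def region_names(raw_json):
    """Return the sorted RegionName values from describe-regions JSON output."""
    regions = json.loads(raw_json)["Regions"]
    return sorted(region["RegionName"] for region in regions)


print(region_names(sample_output))  # ['ap-southeast-2', 'us-east-1']
```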

Provisioning an EC2 Instance

Let’s go ahead and start building our first EC2 server using awscli.

EC2 servers allow the administrator to import an SSH key. As there is no physical console that we can attach to for EC2, SSH is the only default option we have for accessing a server.

The public SSH key is stored within AWS. You are free to allow AWS to generate the public and private keys or generate the keys yourself.

We’ll proceed by generating the keys ourselves.

$ ssh-keygen -t rsa -f ~/.ssh/ec2 -b 4096

After supplying a complex passphrase, we’re ready to upload our new SSH public key into AWS.

$ aws ec2 import-key-pair --key-name my-ec2-key \
    --public-key-material "$(cat ~/.ssh/ec2.pub)"

The --public-key-material option takes the actual public key, not the path to the public key.

Let’s create a new Security Group and open up port 22/tcp to our workstation's external IP address. Security Groups act as firewalls that we can configure to control inbound and outbound traffic to our EC2 instance.

I generally rely on ifconfig.me to quickly provide me with my external IP address.

$ curl ifconfig.me

198.51.100.100

Now that we know the external IP address of our workstation, we can go ahead and create the Security Group with the appropriate inbound rule.

$ aws ec2 create-security-group \
    --group-name MySecurityGroupSSHOnly \
    --description "Inbound SSH only from my IP address"

$ aws ec2 authorize-security-group-ingress \
    --group-name MySecurityGroupSSHOnly \
    --cidr 198.51.100.100/32 \
    --protocol tcp --port 22
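The /32 suffix in the --cidr value restricts the rule to exactly one address. As a rough sketch of how that value could be derived and sanity-checked before passing it to awscli, the following uses Python's standard ipaddress module; the helper name single_host_cidr is made up for this example.

```python
import ipaddress


def single_host_cidr(ip):
    """Return a CIDR block that matches only the given address."""
    address = ipaddress.ip_address(ip)  # raises ValueError on malformed input
    # A full-length prefix (32 bits for IPv4, 128 for IPv6) covers one host.
    prefix = 32 if address.version == 4 else 128
    return f"{address}/{prefix}"


cidr = single_host_cidr("198.51.100.100")
network = ipaddress.ip_network(cidr)
print(cidr, network.num_addresses)  # 198.51.100.100/32 1
```

Validating the address first means a typo in the IP fails locally instead of producing a security group rule that silently matches nothing.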

We need to know the Amazon Machine Image (AMI) ID for the Linux EC2 machine we are going to provision. If you already have an image ID, you can skip the next command.

AMI IDs for images differ between regions. We can use describe-images to determine the AMI ID for Amazon Linux AMI 2013.09.2, which was released on 2013-12-12.

The name for this AMI is amzn-ami-pv-2013.09.2.x86_64-ebs, with the owner being amazon.

$ aws ec2 describe-images --owners amazon \
    --filters Name=name,Values=amzn-ami-pv-2013.09.2.x86_64-ebs

We’ve combined --owners with the name filter, which produces some important details on the AMI.

What we’re interested in finding is the value for ImageId. If you are connected to the ap-southeast-2 region, that value is ami-5ba83761.

$ aws ec2 run-instances --image-id ami-5ba83761 \
    --key-name my-ec2-key --instance-type t1.micro \
    --security-groups MySecurityGroupSSHOnly

run-instances creates one or more EC2 instances and should output a lot of data. The fields worth noting are:

- InstanceId: This is the EC2 instance ID which we will use to reference this newly provisioned machine in all future awscli commands.

- InstanceType: The type of the instance represents the set combination of CPU, memory, storage and networking capacity that this EC2 instance has. t1.micro is the smallest instance type available and, for new AWS customers, is within the AWS Free Usage Tier.

- PublicDnsName: The DNS record that is automatically created by AWS when we provision a new server. This DNS record resolves to the external IP address, which is found under PublicIpAddress.

- GroupId under SecurityGroups: The AWS Security Group that the EC2 instance is associated with.

If run-instances is successful, we should now have an EC2 instance booting.

$ aws ec2 describe-instances

Within a few seconds, the EC2 instance will be provisioned and you should be able to SSH in as the user ec2-user. From the output of describe-instances, the value of PublicDnsName is the external hostname for the EC2 instance, which we can use for SSH. Once your SSH connection has been established, you can use sudo to become root.

$ ssh -i ~/.ssh/ec2 -l ec2-user \
    ec2-203-0-113-100.ap-southeast-2.compute.amazonaws.com
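Picking PublicDnsName out of the describe-instances output by eye gets tedious once there is more than one instance. With the json output format, the instances are nested under Reservations and then Instances, and a short script can collect the hostnames. The sample below is hand-written and heavily trimmed, and the helper name public_dns_names is invented for this sketch.

```python
import json

# Hand-written, trimmed sample in the shape of
# `aws ec2 describe-instances --output json`.
sample_output = """
{
    "Reservations": [
        {
            "Instances": [
                {
                    "InstanceId": "i-0d9c2b31",
                    "InstanceType": "t1.micro",
                    "PublicDnsName": "ec2-203-0-113-100.ap-southeast-2.compute.amazonaws.com"
                }
            ]
        }
    ]
}
"""


def public_dns_names(raw_json):
    """Map each InstanceId to its PublicDnsName (empty string if absent)."""
    names = {}
    for reservation in json.loads(raw_json)["Reservations"]:
        for instance in reservation["Instances"]:
            names[instance["InstanceId"]] = instance.get("PublicDnsName", "")
    return names


for instance_id, hostname in public_dns_names(sample_output).items():
    print(instance_id, hostname)
```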

A useful awscli feature is get-console-output, which allows us to view the Linux console of an instance shortly after the instance boots. You will have to pipe the output of get-console-output into sed to correct line feeds and carriage returns.

$ aws ec2 get-console-output --instance-id i-0d9c2b31 \
    | sed 's/\\n/\n/g' | sed 's/\\r/\r/g'
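If you are already post-processing awscli output in Python, the same two substitutions can be done without sed. This is a minimal sketch: the function name is invented, and the sample text is made up rather than real console output.

```python
def unescape_console_output(text):
    """Turn literal backslash-r and backslash-n sequences into real control characters."""
    # Mirrors the two sed expressions: the two-character sequences
    # "\r" and "\n" become a carriage return and a line feed.
    return text.replace("\\r", "\r").replace("\\n", "\n")


# Made-up sample containing escaped line endings
raw = "Booting kernel\\r\\nStarting sshd\\r\\n"
print(unescape_console_output(raw))
```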

Wednesday 14 January 2015

Storing Apache Hadoop Data on the Cloud - HDFS vs. S3

Ken and Ryu are both the best of friends and the greatest of rivals in the Street Fighter game series. When it comes to Hadoop data storage on the cloud though, the rivalry lies between Hadoop Distributed File System (HDFS) and Amazon’s Simple Storage Service (S3). Although Apache Hadoop traditionally works with HDFS, it can also use S3 since it meets Hadoop’s file system requirements. Netflix’s Hadoop data warehouse utilizes this feature and stores data on S3 rather than HDFS. Why did Netflix choose this data architecture? To understand their motives let’s see how HDFS and S3 do... in battle!

Round 1 - Scalability

HDFS relies on local storage that scales horizontally. Increasing storage space means either adding larger hard drives to existing nodes or adding more machines to the cluster. This is feasible, but more costly and complicated than S3.

S3 automatically scales according to the stored data, with no need to change anything. Even the space available is practically infinite (no limit from Amazon at least).

First round goes to S3

Round 2 - Durability

A statistical model for HDFS data durability suggests that the probability of losing a block of data (64MB by default) on a large 4,000 node cluster (16 PB total storage, 250,736,598 block replicas) is 5.7×10⁻⁷ in the next 24 hours and 2.1×10⁻⁴ in the next 365 days. However, for most clusters, which contain only a few dozen instances, the probability of losing data can be much higher.

S3 provides a durability of 99.999999999% of objects per year, meaning that with 10,000 objects stored you could expect to lose a single object once every 10,000,000 years (see the S3 FAQ).

It gets even better. About a year and a half ago one of my colleagues at Xplenty took an AWS workshop at Amazon. Their representatives claimed that they haven’t actually lost a single object in the default S3 storage (a cheaper option, Reduced Redundancy Storage (RRS), is available with a durability of only 99.99%).

Large clusters may have excellent durability, but in most cases S3 is more durable than HDFS.

S3 wins again
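The S3 durability figure quoted in this round can be checked with a few lines of arithmetic. The sketch below uses Decimal so that the eleven nines are not blurred by floating-point rounding; the function name is invented, and the calculation assumes object losses are independent and spread evenly over time.

```python
from decimal import Decimal


def years_between_losses(object_count, durability_percent):
    """Expected number of years between single-object losses."""
    # Probability that any one object is lost in a given year
    annual_loss_rate = 1 - Decimal(durability_percent) / 100
    # Expected number of objects lost per year across the whole collection
    expected_losses_per_year = Decimal(object_count) * annual_loss_rate
    return 1 / expected_losses_per_year


# Standard S3 storage: 99.999999999% durability, 10,000 objects
print(int(years_between_losses(10_000, "99.999999999")))  # 10000000

# Reduced Redundancy Storage: 99.99% durability, 10,000 objects
print(int(years_between_losses(10_000, "99.99")))  # 1
```

Seen this way, the gap between the two storage classes is stark: the same 10,000 objects go from one expected loss in ten million years to about one per year.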

Round 3 - Persistence

Data doesn’t persist when stopping EC2 or EMR instances. Nonetheless, costly EBS volumes can be used to persist the data on EC2.

On S3 the data always persists.

S3 wins

Round 4 - Price

To preserve data integrity, HDFS stores three copies of each block of data by default. This means that the storage space needed by HDFS is three times the size of the actual data, and it costs accordingly. Although data replication is not mandatory, storing just one copy would eliminate the durability of HDFS and could result in loss of data.

Amazon takes care of backing up the data on S3, so 100% of the space you pay for is available for your data. S3 also supports storing compressed files, which considerably reduces the space needed as well as the bill.

Winner - S3

Round 5 - Performance

HDFS performance is great. The data is stored and processed on the same machines, which improves access and processing speed.

Unfortunately S3 doesn’t perform as well as HDFS. The latency is obviously higher and the data throughput is lower. However, jobs on Hadoop are usually made of chains of map-reduce jobs, and intermediate data is stored in HDFS and the local file system, so other than when reading from or writing to Amazon S3 you get the throughput of the nodes' local disks.

We ran some tests recently with TestDFSIO, a read/write test utility for Hadoop, on a cluster of m1.xlarge instances with four ephemeral disk devices per node. The results confirm that HDFS performs better:

| | HDFS on Ephemeral Storage | Amazon S3 |
|---|---|---|
| Read | 350 mbps/node | 120 mbps/node |
| Write | 200 mbps/node | 100 mbps/node |

Round 6 - Security

Some people think that HDFS is not secure, but that’s not true. Hadoop provides user authentication via Kerberos and authorization via file system permissions. Hadoop 2, the release that introduced YARN, takes it even further with a feature called federation, which divides a cluster into several namespaces and so prevents users from accessing data that does not belong to them. Data can be uploaded to the Amazon instances securely via SSL.

S3 has built-in security. It supports user authentication to control who has access to the data; at first, only the bucket and object owners do. Further permissions can be granted to users and groups via bucket policies and Access Control Lists (ACLs). Data can be encrypted and uploaded securely via SSL.

It’s a tie

Round 7 - Limitations

Even though HDFS can store files of any size, it has issues storing really small files (they should be concatenated or combined into Hadoop Archives). Also, data saved on a certain cluster is only available to machines on that cluster and cannot be used by instances outside the cluster.

That’s not the case with S3. The data is independent of Hadoop clusters and can be processed by any number of clusters simultaneously. However, files on S3 have several limitations. They can have a maximum size of only 5GB, and additional Hadoop storage formats, such as Parquet or ORC, cannot be used on S3. That’s because Hadoop needs to access particular bytes in these files, an ability that’s not provided by S3.

Another tie

Tonight’s Winner

With better scalability, built-in persistence, and lower prices, S3 is tonight’s winner! Nonetheless, for better performance and no file size or storage format limitations, HDFS is the way to go.
