CentOS, rmr (RHadoop): hadoop streaming failed with error code 1

b=mapreduce(input=a,map=function(k,v) keyval(v,v^2))

packageJobJar: [/tmp/Rtmp8skozB/rmr-local-env, /tmp/Rtmp8skozB/rmr-global-env, /tmp/Rtmp8skozB/rhstr.map14f121ad6582, /var/lib/hadoop-0.20/cache/root/hadoop-unjar8292210935392046645/] [] /tmp/streamjob4219155535407733796.jar tmpDir=null

12/06/27 11:31:45 INFO mapred.FileInputFormat: Total input paths to process : 1

12/06/27 11:31:46 INFO streaming.StreamJob: getLocalDirs(): [/var/lib/hadoop-0.20/cache/root/mapred/local]

12/06/27 11:31:46 INFO streaming.StreamJob: Running job: job_201206271130_0001

12/06/27 11:31:46 INFO streaming.StreamJob: To kill this job, run:

12/06/27 11:31:46 INFO streaming.StreamJob: /usr/lib/hadoop/bin/hadoop job -Dmapred.job.tracker=localhost:8021 -kill job_201206271130_0001

12/06/27 11:31:46 INFO streaming.StreamJob: Tracking URL: http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201206271130_0001

12/06/27 11:31:47 INFO streaming.StreamJob: map 0% reduce 0%

12/06/27 11:32:07 INFO streaming.StreamJob: map 100% reduce 100%

12/06/27 11:32:07 INFO streaming.StreamJob: To kill this job, run:

12/06/27 11:32:07 INFO streaming.StreamJob: /usr/lib/hadoop/bin/hadoop job -Dmapred.job.tracker=localhost:8021 -kill job_201206271130_0001

12/06/27 11:32:07 INFO streaming.StreamJob: Tracking URL: http://localhost.localdomain:50030/jobdetails.jsp?jobid=job_201206271130_0001

12/06/27 11:32:07 ERROR streaming.StreamJob: Job not successful. Error: NA

12/06/27 11:32:07 INFO streaming.StreamJob: killJob...

Streaming Command Failed!

Error in mr(map = map, reduce = reduce, reduce.on.data.frame = reduce.on.data.frame, :

hadoop streaming failed with error code 1

I checked that the three required environment variables were all set.

The error message is very general, so I then checked the jobtracker/tasktracker log.

Caused by: java.lang.RuntimeException: configuration exception

at org.apache.hadoop.streaming.PipeMapRed.configure(PipeMapRed.java:230)

at org.apache.hadoop.streaming.PipeMapper.configure(PipeMapper.java:66)

... 22 more

Caused by: java.io.IOException: Cannot run program "Rscript": java.io.IOException: error=2, No such file or directory

at java.lang.ProcessBuilder.start(ProcessBuilder.java:460)

at org.apache.hadoop.streaming.PipeMapRed.configure(PipeMapRed.java:214)

... 23 more

Caused by: java.io.IOException: java.io.IOException: error=2, No such file or directory

at java.lang.UNIXProcess.<init>(UNIXProcess.java:148)

at java.lang.ProcessImpl.start(ProcessImpl.java:65)

at java.lang.ProcessBuilder.start(ProcessBuilder.java:453)

... 24 more

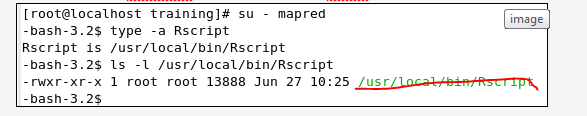

It looks like the job tried to run Rscript but failed, so my first impression was that it could be a permission issue.

I then ran as the mapreduce user and tried to locate Rscript. It was found, and the permissions were OK.

Everyone has execute access, so could it be a path issue? I gave it a try and made a link at /usr/bin/Rscript. After that, everything worked. Super!
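For reference, the check and the fix can be sketched in shell roughly as below. This is only a sketch: `on_path` and `link_into` are hypothetical helper names, and both the `/bin:/usr/bin` search path and the `/usr/local/bin/Rscript` location in the example are assumptions, not values taken from my machine. The idea is simply to test whether Rscript resolves on the PATH the TaskTracker is likely to see and, if it does not, to link it into a directory that is on that PATH.

```shell
#!/bin/sh
# Sketch only: on_path and link_into are hypothetical helper names.

# Succeeds if program $1 resolves when PATH is set to $2.
# The subshell keeps the PATH change from leaking into the caller.
on_path() {
    ( PATH="$2"; command -v "$1" ) >/dev/null 2>&1
}

# Symlink the executable file $1 into directory $2 under its own name.
link_into() {
    if [ ! -f "$1" ] || [ ! -x "$1" ]; then
        echo "not an executable file: $1" >&2
        return 1
    fi
    ln -sf "$1" "$2/$(basename "$1")"
}

# Example, run as root. Both paths are assumptions; adjust them to
# wherever R is installed and to the PATH your TaskTracker really has.
# on_path Rscript /bin:/usr/bin || link_into /usr/local/bin/Rscript /usr/bin
```

Checking against an explicit search path rather than the login shell's PATH matters here, because a daemon's environment can differ from that of an interactive user, which would explain why locating Rscript by hand succeeded while the streaming job could not run it.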
