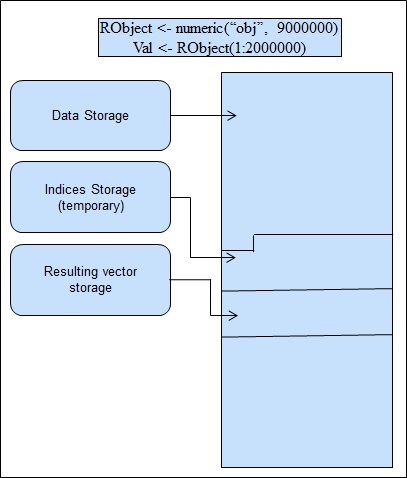

Native R keeps every object in RAM (for details, see R's memory management documentation). Depending on the hardware configuration, R objects can use only up to about 2-4 GB of memory. Beyond that, R stops with “Error: cannot allocate vector of size ……”, which leaves us handicapped when working with big data in R.

Data Storage with Standard R Objects
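As a rough, hypothetical illustration of how quickly that limit is reached: every numeric (double) value takes 8 bytes, so a purely numeric table needs about rows × columns × 8 bytes. The helper `estimate_numeric_bytes()` is made up for this sketch and ignores per-object overhead and character columns; `object.size()` is base R.

```r
# Hypothetical helper: approximate RAM needed for a table of double columns.
# Each double takes 8 bytes; per-object overhead is ignored.
estimate_numeric_bytes <- function(n_rows, n_cols) {
  n_rows * n_cols * 8
}

# 50 million rows x 10 numeric columns already needs about 4 GB
est <- estimate_numeric_bytes(50e6, 10)
cat(sprintf("Estimated size: %.1f GB\n", est / 1e9))

# Compare with what R reports for one million doubles actually held in RAM
x <- numeric(1e6)
print(object.size(x), units = "Mb")
```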

Thanks to the R open-source community, groups of scholars continuously strive to create R packages that help us work effectively with big data.

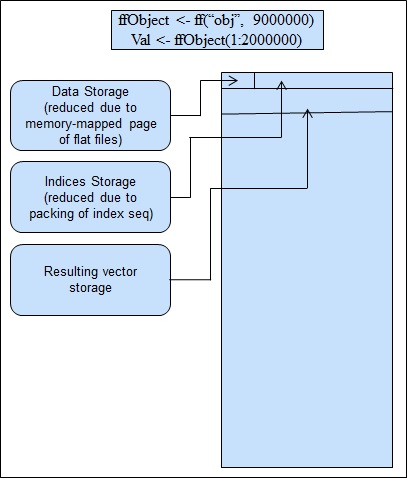

The ff package, developed by Daniel Adler, Christian Gläser, Oleg Nenadic, Jens Oehlschlägel and Walter Zucchini and maintained by Jens Oehlschlägel, is designed to overcome this limitation. Rather than keeping data in memory, it stores them as native binary flat files on other media such as a hard disk, CD or DVD. It also lets you work with very large data files simultaneously. It reads a data file in chunks and writes each chunk to the external drive.

Data Storage with ff

Read a csv file using the ff package

>library(ff)

>options(fftempdir = "path/to/binary/files")  # placeholder: the folder where ff should store its binary files

>file_chunks <- read.csv.ffdf(file="big_data.csv", header=TRUE, sep=",", VERBOSE=TRUE, next.rows=500000, colClasses=NA)

This reads big_data.csv chunk by chunk, next.rows (here 500,000) rows at a time. Each chunk is written as binary files to the external media, and only pointers to those files are kept in RAM. The process repeats until no rows of the csv file are left.

Functioning of the ff package
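The chunk-by-chunk idea can be illustrated in plain base R. This is a hypothetical sketch, not ff's implementation: `read_csv_in_chunks()` and its `process_chunk` callback are made-up names, and where ff would write each chunk to binary files on disk, the callback here just counts rows. It also assumes a simple comma-separated file with no newlines embedded inside quoted fields.

```r
# Hypothetical sketch of chunked reading (the idea behind read.csv.ffdf):
# read a CSV through an open connection, next_rows lines at a time.
read_csv_in_chunks <- function(path, next_rows, process_chunk) {
  con <- file(path, open = "r")
  on.exit(close(con))
  # Parse the header line once (scan strips the quotes write.csv adds)
  header <- scan(text = readLines(con, n = 1), what = "", sep = ",",
                 quiet = TRUE)
  n_chunks <- 0
  repeat {
    lines <- readLines(con, n = next_rows)
    if (length(lines) == 0) break          # no rows left in the file
    chunk <- read.csv(text = lines, header = FALSE, col.names = header)
    process_chunk(chunk)                   # ff would write this chunk to disk
    n_chunks <- n_chunks + 1
  }
  n_chunks
}

# Usage: write a small demo file, then process it 4 rows at a time
demo <- data.frame(id = 1:10, value = (1:10) * 1.5)
path <- tempfile(fileext = ".csv")
write.csv(demo, path, row.names = FALSE)

total_rows <- 0
n <- read_csv_in_chunks(path, next_rows = 4, function(chunk) {
  total_rows <<- total_rows + nrow(chunk)
})
cat("chunks:", n, "rows:", total_rows, "\n")
```

Only one chunk is held in memory at a time, which is what lets this approach scale past the RAM limit.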

In the same way, we can write csv or other flat files in chunks: ff reads the data chunk by chunk from the HDD or other external media and writes it into csv or another supported format.

>write.csv.ffdf(file_chunks, "file_name.csv")
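Chunked writing can be sketched in base R as well. This hypothetical `write_csv_in_chunks()` is not ff code: it writes the header with the first block of rows and appends every later block, so at no point does more than one block need to be formatted at once.

```r
# Hypothetical sketch of chunked writing: header once, then append each block.
write_csv_in_chunks <- function(df, path, chunk_rows) {
  stopifnot(nrow(df) > 0)
  starts <- seq(1, nrow(df), by = chunk_rows)
  for (i in seq_along(starts)) {
    rows <- starts[i]:min(starts[i] + chunk_rows - 1, nrow(df))
    write.table(df[rows, , drop = FALSE], path,
                sep = ",", row.names = FALSE,
                col.names = (i == 1),   # header only with the first chunk
                append = (i > 1))       # later chunks are appended
  }
  invisible(length(starts))
}

# Usage: write 10 rows in chunks of 4, then read the file back
demo <- data.frame(id = 1:10, value = (1:10) * 1.5)
path <- tempfile(fileext = ".csv")
n_chunks <- write_csv_in_chunks(demo, path, chunk_rows = 4)
back <- read.csv(path)
```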

Together with the ffbase package, ff lets us implement all sorts of operations such as joins, aggregations, and slicing and dicing.

>library(ffbase)

>Merged_data <- merge(ffobject1, ffobject2, by.x=c("Col1", "Col2"), by.y=c("Col1", "Col2"), trace=TRUE)

The merge function from ffbase works much like merge for data frames, but it supports only inner and left joins.
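For comparison, the two supported join types can be seen on small in-memory data frames with base R's `merge()`, which takes the same `by.x`/`by.y` style of key columns. The tables and values below are made up for illustration; `all.x = TRUE` is how base R requests a left join.

```r
# Made-up example tables sharing the composite key (Col1, Col2)
orders <- data.frame(Col1 = c("a", "a", "b", "c"),
                     Col2 = c(1, 2, 1, 1),
                     amount = c(10, 20, 30, 40))
prices <- data.frame(Col1 = c("a", "b"),
                     Col2 = c(1, 1),
                     price = c(1.5, 2.5))

# Inner join: only key combinations present in both tables
inner <- merge(orders, prices, by.x = c("Col1", "Col2"), by.y = c("Col1", "Col2"))

# Left join: every row of the first table, NA where the second has no match
left <- merge(orders, prices, by.x = c("Col1", "Col2"), by.y = c("Col1", "Col2"),
              all.x = TRUE)

print(inner)
print(left)
```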

>library(“doBy”)

>AggregatedData = ffdfdply(ffobject, split=as.character(ffobject$Col1), FUN=function(x) summaryBy(Col3+Col4+Col5 ~ Col1, data=x, FUN=sum))

To perform the aggregation, I used the summaryBy function from the doBy package. In the ffdfdply call above, we split the data on a key column. If the key is a combination of two or more fields, we can generate a key column with the ikey function:

>ffobject$KeyColumn <- ikey(ffobject[c("Col1","Col2","Col3")])
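The reasoning behind ffdfdply can be sketched in plain base R. As I understand ffdfdply, it processes the data in batches, never splits the rows of one key across two batches, and stacks whatever FUN returns for each batch; a batch may still contain several keys, which is why FUN above groups by Col1 again. The sketch is hypothetical: the data frame is made up, `match()` on pasted columns stands in for ikey (whose actual key numbering may differ), and base `aggregate()` stands in for doBy's summaryBy.

```r
# Made-up data with a composite key (Col1, Col2)
df <- data.frame(Col1 = c("a", "a", "b", "b", "c", "c"),
                 Col2 = c(1, 1, 1, 2, 1, 1),
                 Col3 = 1:6)

# Composite key: one integer per distinct (Col1, Col2) combination.
# Assumes "|" never occurs inside the key values.
combo <- paste(df$Col1, df$Col2, sep = "|")
df$KeyColumn <- match(combo, unique(combo))

# Assign whole key groups to batches of at most 2 keys each,
# so the rows of one key are never split across batches
keys <- unique(df$KeyColumn)
batch_of_key <- ceiling(seq_along(keys) / 2)
df$batch <- batch_of_key[match(df$KeyColumn, keys)]

# Aggregate each batch separately (FUN = sum, as in the summaryBy call),
# then stack the per-batch results
per_batch <- lapply(split(df, df$batch), function(x) {
  aggregate(Col3 ~ Col1 + Col2, data = x, FUN = sum)
})
result <- do.call(rbind, per_batch)
rownames(result) <- NULL
print(result)
```

Because no key straddles two batches, stacking the per-batch sums gives the same totals as aggregating the whole table at once.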

Despite advantages such as working with big data and depending less on RAM, ff has a few limitations:

1. Sometimes we have to compromise on speed when performing complex operations on a huge data set.

2. Development is harder with ff than with ordinary in-memory R objects.

3. We need to take care of the flat files stored on disk; otherwise the HDD or external media can be left with little or no space.
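For the third point, ff's own `delete()` removes the file behind an ff object you no longer need. For leftover files, a blunt base-R helper can report and clear what has accumulated in a folder such as the one set as fftempdir. `dir_usage()` is hypothetical; only run it with `remove = TRUE` after the ff objects using those files are gone, or they will lose their data.

```r
# Hypothetical helper: total bytes used by the files in a directory,
# optionally deleting them afterwards.
dir_usage <- function(dir, remove = FALSE) {
  files <- list.files(dir, full.names = TRUE, recursive = TRUE)
  total_bytes <- sum(file.info(files)$size)
  if (remove && length(files) > 0) {
    file.remove(files)
  }
  total_bytes
}

# Usage with a throwaway folder standing in for fftempdir
demo_dir <- file.path(tempdir(), "ff_demo")
dir.create(demo_dir, showWarnings = FALSE)
writeBin(raw(1000), file.path(demo_dir, "chunk1.bin"))
writeBin(raw(500), file.path(demo_dir, "chunk2.bin"))

used <- dir_usage(demo_dir)
freed <- dir_usage(demo_dir, remove = TRUE)
left <- dir_usage(demo_dir)
cat("used:", used, "bytes; left after cleanup:", left, "bytes\n")
```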

#####################

Opening Large CSV Files in R

Before heading home for the holidays, I had a large data set (1.6 GB, over 1.25 million rows) with columns of text and integers ripped out of the company (Kwelia) database and put into a .csv file, since I was going to be offline a lot over the break. I tried opening the csv file in the usual way, but it never finished, even after I let it run all night. I also tried reading it into an SQLite database first and reading it out of that, but the file was so messy that it kept coming back with errors. I finally got it read into R by using the ff package and the following code:
